The cables connecting neurons act like independent ‘mini-computers’ to store different types of information.

The brain’s rules seem simple: Fire together, wire together.

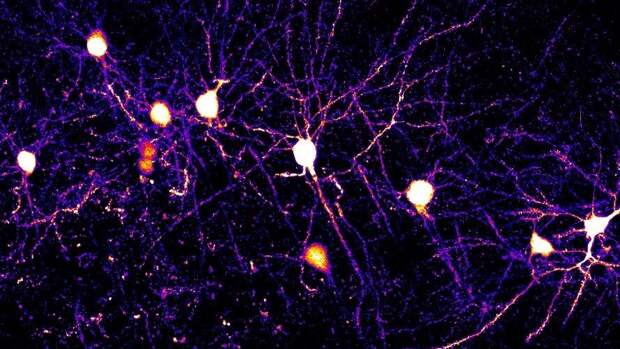

When groups of neurons activate, they become interconnected. This networking is how we learn, reason, form memories, and adapt to our world, and it’s made possible by synapses, tiny junctions dotting a neuron’s branches that receive and transmit input from other neurons.

Neurons have often been called the computational units of the brain. But more recent studies suggest that’s not the case. Their input cables, called dendrites, seem to run their own computations, and these alter the way neurons—and their associated networks—function.

A new study in Science sheds light on how these “mini-computers” work. A team from the University of California, San Diego watched as synapses lit up in a mouse’s brain while it learned a new motor skill. Depending on their location on a neuron’s dendrites, the synapses followed different rules. Some were keen to make local connections. Others formed longer circuits.

“Our research provides a clearer understanding of how synapses are being modified during learning,” said study author William “Jake” Wright in a press release.

The work offers a glimpse into how each neuron functions as it encodes memories. “The constant acquisition, storage, and retrieval of memories are among the most essential and fascinating features of the brain,” wrote Ayelén I. Groisman and Johannes J. Letzkus at the University of Freiburg in Germany, who were not involved in the study.

The results could provide insight into “offline learning,” such as when the brain etches fleeting memories into more permanent ones during sleep, a process we still don’t fully understand.

They could also inspire new AI methods. Most current brain-based algorithms treat each artificial neuron as a single entity with synapses following the same set of rules. Tweaking these rules could drive more sophisticated computation in mechanical brains.

A Neural Forest

Flip open a neuroscience textbook, and you’ll see a drawing of a neuron. The receiving end, the dendrite, looks like the dense branches of a tree. These branches funnel electrical signals into the body of the cell. Another branch relays outgoing messages to neighboring cells.

But neurons come in multiple shapes and sizes. Some stubby ones create local circuits using very short branches. Others, for example pyramidal cells, have long, sinewy dendrites that reach toward the top of the brain like broccolini. At the other end, they sprout bushes to gather input from deeper brain regions.

Dotted along all these branches are little hubs called synapses. Scientists have long known that synapses connect during learning. As they do, synapses fine-tune their molecular docks so they’re more or less willing to network with neighboring synapses.

But how do synapses know what adjustments best contribute to the neuron’s overall activity? Most only capture local information, yet somehow, they unite to tweak the cell’s output. “When people talk about synaptic plasticity, it’s typically regarded as uniform within the brain,” said Wright. But learning initially occurs inside single synapses, each with its own personality.

Scientists have sought answers to this question, known as the credit assignment problem, by watching a handful of neurons in a dish or running simulations. But the neurons in these studies aren’t part of the brain-wide networks we use to learn, encode, and store memories, so they can’t capture how individual synapses contribute.

Double-Team

In the new study, researchers added genes to mice so they could monitor single synapses in the brain region involved in movement. They then trained the mice to press a lever for a watery treat.

Over two weeks, the team captured activity from pyramidal cells—the ones with long branches on one end and bushes on the other. Rather than only observing each neuron’s activity as a whole, the team also watched individual synapses along each dendrite.

They didn’t behave the same way. Synapses on the longer branch closer to the top of the brain—known as the apical dendrite—rapidly synced with neighbors. Their connections strengthened and formed a tighter network.

“This indicates that learning-related plasticity is governed by local interactions between nearby synaptic inputs in apical dendrites,” wrote Groisman and Letzkus.

By contrast, synapses on the bush-like basal dendrites mostly strengthened or weakened their connections in step with the neuron’s overall activity.

A neuron’s cell body—from which dendrites sprout—is also a computing machine. In another experiment, blocking the cell body’s action slashed signals from basal dendrites but not from apical dendrites. In other words, the neuron’s synapses functioned differently, depending on where they were. Some followed global activity in the cell; others cared more about local issues.
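To make the contrast concrete, here is a toy sketch in Python. It is not the study's model. The function names, the learning rate, the baseline, and the choice of "immediate neighbors" are all illustrative assumptions. One rule adjusts a synapse based only on what its neighbors are doing; the other adjusts it based on the neuron's overall output.

```python
def local_update(weights, activity, lr=0.1):
    """Apical-style toy rule (assumed): a synapse strengthens when it is
    active together with its immediate neighbors on the branch.
    The neuron's overall output is ignored."""
    n = len(weights)
    updated = []
    for i, w in enumerate(weights):
        neighbors = [activity[j] for j in (i - 1, i + 1) if 0 <= j < n]
        neighbor_mean = sum(neighbors) / len(neighbors) if neighbors else 0.0
        updated.append(w + lr * activity[i] * neighbor_mean)
    return updated


def global_update(weights, activity, lr=0.1, baseline=1.0):
    """Basal-style toy rule (assumed): an active synapse strengthens when the
    neuron's total output is above a baseline and weakens when it is below."""
    output = sum(w * a for w, a in zip(weights, activity))
    return [w + lr * a * (output - baseline) for w, a in zip(weights, activity)]


# Four synapses with equal starting strength; synapse 2 is silent.
weights = [0.5, 0.5, 0.5, 0.5]
activity = [1.0, 1.0, 0.0, 1.0]

apical_like = local_update(weights, activity)
basal_like = global_update(weights, activity)
```

In this example, the last synapse is active but its only neighbor is silent, so the local rule leaves it unchanged while the global rule strengthens it along with every other active synapse. The same input pattern produces different changes depending on which rule a synapse follows.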

“This discovery fundamentally changes the way we understand how the brain solves the credit assignment problem, with the concept that individual neurons perform distinct computations in parallel in different subcellular compartments,” study senior author Takaki Komiyama said in the press release.

The work joins other efforts showcasing the brain’s complexity. Far from being a single unit of computation, a neuron has branches that can flexibly apply different rules to encode memories.

This raises yet more questions.

The two types of dendrites, apical and basal, receive different types of information from different areas of the brain. The study’s techniques could help scientists hunt down and tease apart these differing network connections and, in turn, learn more about how we form new memories. Also mysterious are the apical dendrites’ rogue synapses, which are unaffected by signals from the cell body.

One theory suggests that independence from central control could allow “each dendritic branch to operate as an independent memory unit, greatly increasing the information storage capacity of single neurons,” wrote Groisman and Letzkus. These synapses could also be critical for “offline learning,” such as during sleep, when we build long-lasting memories.

The team is now studying how neurons use these different rules, and whether the rules change in Alzheimer’s, autism, addiction, or post-traumatic disorders. The work could help us better understand what “goes wrong in these different diseases,” Wright said.

The post Each of the Brain’s Neurons Is Like Multiple Computers Running in Parallel appeared first on SingularityHub.